Sarah Cashman, Michela Ledwidge and Brett Neilson

The world has been transformed by data. We rely on it. It’s an essential part of contemporary life. Yet we rarely give thought to where it comes from, how it moves around the globe, or the environmental costs of its storage, transmission and processing. What if you could hear information flowing from one data centre to the next? What if each data transaction came with its own unique audio signature? From the gentle resonance of mundane data operations to the eerie sound of hijacks and attacks, the Data Farms sonification was an experiment in giving voice to data and its movements in the Asia-Pacific region.

The Data Farms sonification took the form of an immersive spatial audio application intended for use on a mobile device with headphones. Four or five users would carry their devices into an installation setting defined by a central marker. As they moved around this space, their locations would generate sonic ‘events’ based on real data and the probabilities of these events occurring in actual data centres. By default, these events were not visually depicted so that users could take an exploratory approach. However, they could choose to display on their devices a text transcript describing the triggered events.

As it happened, this sonification experiment was stalled by the Coronavirus pandemic. The outbreak hit just as the build was finished. Lockdowns closed galleries and other installation venues. Social distancing protocols prevented users from gathering in a confined space. Experiment is a process prone to failure, but in this case it was the human and social landscape in which the sonification was to take shape that crashed. Responding to this predicament, this article and the videos that accompany it give a sense of an experience that is yet to be.

Concept

The sonification was developed by Sydney studio Mod in collaboration with researchers from the Data Farms project. Named for ‘modding’ practices in game cultures, Mod is a studio specializing in real-time and virtual production across platforms. Drawing on experience in augmented reality (AR) development, Mod’s creative and technical lead Michela Ledwidge worked with the Data Farms team to conceptualise and execute the build. The application was developed on Unreal Engine and sought to break ground by correlating locationally generated spatial audio to physical movement through a virtual soundscape.

The challenges of translating the Data Farms research into an amenable user experience were manifest. With research sites in Hong Kong, Singapore and Sydney, the project approached data centres not simply as digital infrastructures critical to contemporary life but also as political institutions that generate distinct forms of power. Central interests were how these facilities generate client footprints that extend beyond national borders and the relevance of these networked territories for data extraction and labour exploitation.

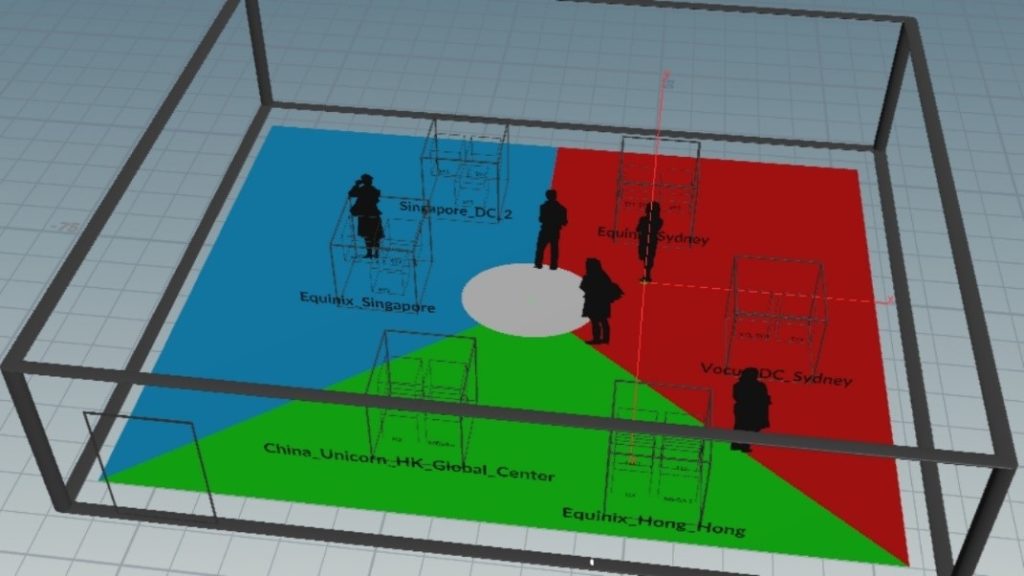

These concerns influenced key design features of the sonification. For instance, the virtual space was divided into three ‘territories’ that represented the project research sites. As users moved between these territories, they would hear an instrumental variation in the sound generated on one of the application’s two tracks. Importantly, on the other track, they could still hear events spawned in the other territories. However, due to their position, they would hear these events with less intensity, volume and tonality than if they were proximate to them. These sonic qualities reflected the project’s interests in data transactions and territory.
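The relationship between position, territory and audibility can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Unreal Engine implementation: the division of the space into three equal sectors around the central marker, the inverse-distance rolloff, and the names `territory_at` and `gain` are all hypothetical. The sketch also models volume only, leaving aside the changes in intensity and tonality described above.

```python
import math
from typing import Tuple

# Assumed layout: three equal 120-degree sectors around the central marker.
TERRITORIES = ("Hong Kong", "Singapore", "Sydney")


def territory_at(x: float, y: float) -> str:
    """Return the territory whose sector contains (x, y), measured from the marker."""
    angle = math.degrees(math.atan2(y, x)) % 360.0
    # The extra modulo guards against a rounding result of exactly 360.0.
    return TERRITORIES[int(angle // 120.0) % len(TERRITORIES)]


def gain(listener: Tuple[float, float], source: Tuple[float, float],
         rolloff: float = 1.0) -> float:
    """Assumed inverse-distance gain: 1.0 at the source, falling towards 0 with distance."""
    distance = math.dist(listener, source)
    return 1.0 / (1.0 + rolloff * distance)


listener = (2.0, 1.0)
print(territory_at(*listener))      # territory the listener is standing in
print(gain(listener, (-3.0, 4.0)))  # relative volume of an event spawned elsewhere
```

Under these assumptions, an event spawned in another territory remains audible but quieter, which is the behaviour the two-track design aims for.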

Deeply embedded in the application’s design, these auditory variations were not meant to be immediately intelligible to users. Instead, the sonification was intended to provide an aesthetically memorable experience, to provoke a sense of wonder and play that would prompt users to develop greater awareness of the role of data centres in today’s economy, culture and society. To this end, two features were important. First, the experience should be brief, no longer than two or three minutes. Second, the sonification should have musical and cultural integrity. For this latter reason, musician and composer Yunyu Ong was added to the team to craft the sonic elements representing Hong Kong, Singapore and Sydney.

The choice to build a sonification was sparked by a desire to offer a contrast to the mainstreaming of data visualisation as a research method and means of knowledge dissemination. The intention was to engage and communicate with non-expert publics on an intuitive and emotional level. However, the expectation was that this experimentation would also allow researchers to stumble across new patterns and questions in exploring data centre operations. Given the high degree of security surrounding these facilities, there was also the hope of opening paths of investigation that didn’t require physical access or direct contact with industry, although these were methods pursued in other parts of the Data Farms project. The sonification drew on publicly accessible internet databases as well as an expressly constructed data model that generated plausible events based on a researched portfolio of use cases.

Data model

Sonification is not merely composition. It requires data to sonify. In the case of a sonification about data centres, it would seem that such data is abundant. After all, these facilities are vast sheds for the storage, processing and transmission of data. Yet much data held in data centres is proprietary, meaning unavailable for public use. Those data that are accessible do not necessarily relate to data centre operations just because they are transacted in these infrastructures.

In conceptualising the sonification, data sources such as the registry of Autonomous System Numbers maintained by the Internet Assigned Numbers Authority and the PeeringDB database of network interconnection data were referenced. However, these data relate primarily to the routing of data signals between data centres. Their use needed to be supplemented by a data model that could plausibly generate data centre events and consequence scenarios representing routine transactions made in these facilities.

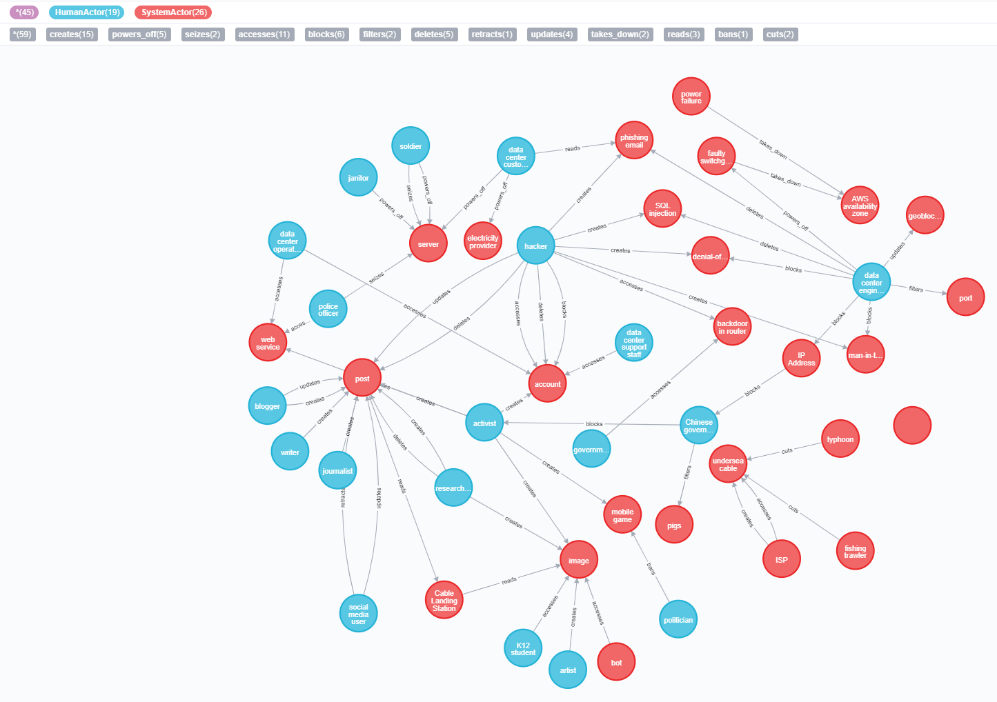

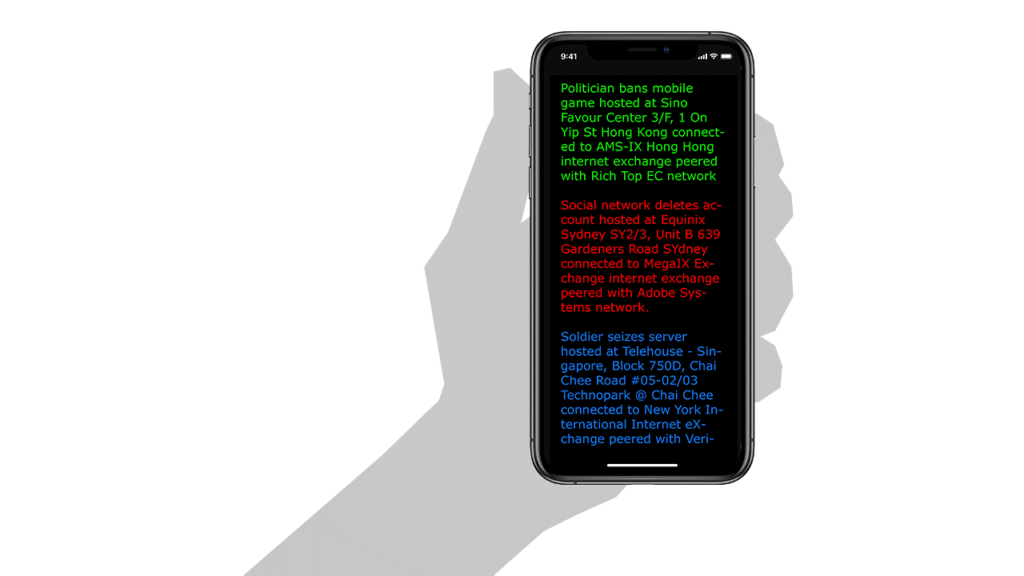

This data model is built on the Neo4j Bloom graph database platform that supports both the public-facing app and a stand-alone query tool. The query tool is intended for use independently of the sonification, to research the relationship between a web resource and associated organisations and territories. The model generates events based on a simple actor, verb, subject syntax; for example, ‘writer posts blog post’, ‘soldier seizes server’, ‘bot deletes social media post’. Permutations of relationships between actors, verbs and subjects are editorially managed in the data model. The data is managed according to a list of use cases derived from the Data Farms project research and categories published by Freedom House detailing scenarios of filtering, blocking, content removal, digital attack, and so on.

Events are generated by impact rules and probabilities in the model. The generated events can be thought of as plausible story elements that are combined with data from PeeringDB to assign a legal personality and location to the facility where they putatively originate, for example, ‘hacker removes web service at Telehouse, Singapore, Block 750D, Chai Chee Road’. These prose narrative events are then sent for sonification and to the text transcript interface that users can access while moving through the soundscape.
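The generation step described above can be sketched as a weighted random choice over editorially managed actor, verb, subject rules, followed by the attachment of a facility. This is an illustrative Python sketch, not the Neo4j model itself: the `Rule` class, the `generate_event` function, and every probability and impact value are hypothetical. The single facility is the one quoted in the example above; in practice the list would be drawn from PeeringDB.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    actor: str
    verb: str
    subject: str
    probability: float  # relative weight (hypothetical value)
    impact: str         # "low", "medium" or "high" (hypothetical value)


# Actor, verb, subject triples taken from the examples in the text.
RULES = [
    Rule("writer", "posts", "blog post", 0.60, "low"),
    Rule("bot", "deletes", "social media post", 0.25, "medium"),
    Rule("hacker", "removes", "web service", 0.10, "high"),
    Rule("soldier", "seizes", "server", 0.05, "high"),
]

# Stand-in for facility records that would come from PeeringDB.
FACILITIES = ["Telehouse, Singapore, Block 750D, Chai Chee Road"]


def generate_event(rng: random.Random) -> str:
    """Pick a rule in proportion to its probability and attach a facility."""
    rule = rng.choices(RULES, weights=[r.probability for r in RULES], k=1)[0]
    facility = rng.choice(FACILITIES)
    return f"{rule.actor} {rule.verb} {rule.subject} at {facility}"


print(generate_event(random.Random()))
```

Passing in a seeded `random.Random` makes a run reproducible, which is convenient when checking that the editorial rules produce plausible sentences.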

The sonification process works according to a system of rules that assign musical qualities to events according to the actors involved, impact and probability. For instance, a low probability event will play at a higher pitch. High and medium impact events are more percussive. Sonic qualities are assigned to actors based on the five elements in Chinese philosophy (metal, wood, water, fire, earth). For example, events involving governmental and civic actors are played with woodwinds under the classification of Wood equating to ‘all actors, civil, legal’. Those involving military or activist actors (‘anything metallic, weaponry, defence, boisterous, violent’) are played on metal instruments such as gongs or xylophones. Those involving social media are associated with Water and played on string instruments.
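These rules lend themselves to a simple lookup. The Python sketch below is an assumption-laden illustration rather than the production rule set: the function `sonic_qualities`, the actor type labels and the 0.1 threshold for a ‘low probability’ event are hypothetical, and only the three elements whose instrumentation is described above are mapped.

```python
from typing import Optional

# Actor types mapped to elements, following the examples in the text.
ELEMENT_BY_ACTOR_TYPE = {
    "governmental": "wood",
    "civic": "wood",
    "military": "metal",
    "activist": "metal",
    "social media": "water",
}

# Instrument families per element; fire and earth are not described in the text.
INSTRUMENT_BY_ELEMENT = {
    "wood": "woodwinds",
    "metal": "metal instruments",
    "water": "strings",
}


def sonic_qualities(actor_type: str, probability: float, impact: str,
                    low_probability_threshold: float = 0.1) -> dict:
    """Assign musical qualities to an event from its actor, probability and impact."""
    element: Optional[str] = ELEMENT_BY_ACTOR_TYPE.get(actor_type)
    return {
        "element": element,
        "instrument": INSTRUMENT_BY_ELEMENT.get(element),
        # Low probability events play at a higher pitch.
        "pitch": "high" if probability < low_probability_threshold else "normal",
        # High and medium impact events are more percussive.
        "percussive": impact in ("medium", "high"),
    }


print(sonic_qualities("military", 0.02, "high"))
```

An actor type outside the table returns `None` for element and instrument, leaving the choice to the composer rather than guessing.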

To supplement these procedurally generated sonifications, other events were also triggered by real network activity. Mod built the Data Farms backend as a web service drawing on both the Neo4j data model and a network security service monitoring Border Gateway Protocol (BGP) incidents. BGP messages are exchanged between network routers, including those in data centres, to advertise available network routes. Where network hijackings or outages occur, the system draws on incident reports to generate short synthesised sonifications with atonal rhythmic qualities. This feature was developed to spotlight network incidents at the specific facilities in the Data Farms data model but was later widened to consider incidents anywhere in the world, so that the public user experience would always include some BGP sonification of the most recent incidents.

The Data Farms public experience (the sonification) plays on a timed loop and resets with a break after each session. Events play randomly according to probabilities programmed into the model. However, each session includes at least one low probability, high impact event to illustrate the range of sonification options and give a loose narrative structure to the user experience. Consider a rare event such as ‘boat cuts internet cable’, an incident with dire consequences for data centre operations. The sonification will play with metal and string instrumentation accompanied by a high-pitched drone for low probability and percussion for high impact.
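One way to combine random playback with the guarantee of a rare, high impact event is to sample the session first and then substitute a rare event if none was drawn. The Python sketch below illustrates that approach under stated assumptions; it is not a description of how the application does it. The function `build_session`, the tuple layout, the probabilities and the 0.1 rarity threshold are all hypothetical.

```python
import random


def build_session(events, length, rng, rare_threshold=0.1):
    """Sample a session and ensure it holds a low probability, high impact event.

    `events` is a list of (description, probability, impact) tuples.
    """
    if length < 1:
        raise ValueError("a session needs at least one event")
    weights = [probability for _, probability, _ in events]
    session = rng.choices(events, weights=weights, k=length)
    rare = [e for e in events if e[1] < rare_threshold and e[2] == "high"]
    # If sampling produced no rare, high impact event, overwrite one slot with one.
    if rare and not any(e in rare for e in session):
        session[rng.randrange(length)] = rng.choice(rare)
    return session


events = [
    ("writer posts blog post", 0.90, "low"),
    ("bot deletes social media post", 0.09, "medium"),
    ("boat cuts internet cable", 0.01, "high"),
]
for description, _, _ in build_session(events, 5, random.Random()):
    print(description)
```

Substituting after sampling keeps the rest of the session faithful to the model's probabilities while still giving each session its narrative high point.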

User experience

In an installation setting, users would view a large screen displaying a brief curatorial message about the sonification before entering the soundscape. As they wait for other users to complete their experience, the same screen displays the textual event log generated by the data model. Data Farms is a real-time spatial audio multi-user installation designed for headphones. The sonified data used in each session is procedurally generated from the data model – no two sessions are the same. While a session’s overall composition is the same for all active users, the sonification is different for each user, based on how they physically move in relation to the virtual 3D soundscape. Walking through the installation gives the sensation of moving through different virtual ‘territories’ as passing between defined areas of the soundscape triggers changes in orchestration.

Users enter the installation with their device held comfortably in front of them and with headphones plugged in. Each user must scan a central marker (e.g. QR code) to initiate the experience. Physical movement in relation to this origin is translated into movement through the virtual soundscape. A challenge identified from user feedback is the tendency for visual outputs on the device screen to interfere with the sonification experience. Screen cues are thus kept to a minimum.

A tension exists between musical and compositional strategies of the sonification, on the one hand, and its information transfer goals, on the other. A design-centred approach configures the sonification less as an instrument of research inquiry and more as media art – a medium for an audience with expectations of a functional and aesthetically pleasing experience. The question of how the user experience leads audiences to reflect on infrastructural power and the social, cultural and economic relevance of data centres remains open.

Afterlife

It is a strange prospect to discuss the afterlife of an installation permanently stalled by the pandemic. In many ways, the sonification has yet to see the light of day. Yet there is the possibility to make the application available online as a downloadable AR experience. The Data Farms web service itself (including an application programming interface, the data model and network monitoring services) can also be made available. A more costly option is to migrate the mobile AR experience to one that can be experienced online as a multi-player equivalent to the location-based experience we intended. Built on the Unreal Engine, this could involve the use of Pixel Streaming (WebRTC) technology so that any desktop web browser could be used to access the same virtual soundscape and hear the interactions of multiple users. Extended Reality (AR, VR, MR) is a fast-evolving field and Covid constraints suggest new directions for supporting both location-based and online audiences.

The Data Farms sonification is an artefact of collaboration between critical academic researchers/theoreticians and creative programmers/producers. The application bears the marks of this relationship, particularly the difficulties of translating empirically driven conceptual research into technical and informational formats that require strong internal consistency and interoperability between platforms. The question of what data could or should be sonified also haunts the collaborative process. The breakthrough moments were surely earned through episodes of frustration. In the end, what matters is the sound.

Data Farms sonification team: Sarah Cashman, Michela Ledwidge, Brett Neilson, Tanya Notley, Ned Rossiter, Yunyu Ong, Mish Sparks