The work below surveys a range of software that visualizes or simulates data centers and their accompanying infrastructure. Data centers are highly complex systems where server hardware, cooling systems, and electrical networks intersect. This technical complexity and opacity are an issue not only for the public, but also for industry insiders like technicians who must monitor these systems and maintain their operations. In recent years a range of software has emerged aiming to assist in this task. Visual tools give workers a visual overview of their facilities, while simulation tools provide flexible ways to test new configurations and evaluate performance without the costs and risks of doing this in the “real world”.

Racktables

Racktables is a “datacenter asset management system”. In essence, it’s a browser-based application for keeping track of hardware, cables, and their current configurations. Racktables can be deployed for just one data center, or to track resources across multiple data center facilities. Racktables is not a simulator, but has some overlap in that it attempts to visually document the array of complex technical systems that comprise modern data centers – in effect, using graphics to provide a dashboard or “digital twin” of what is happening in a center.

Home screen

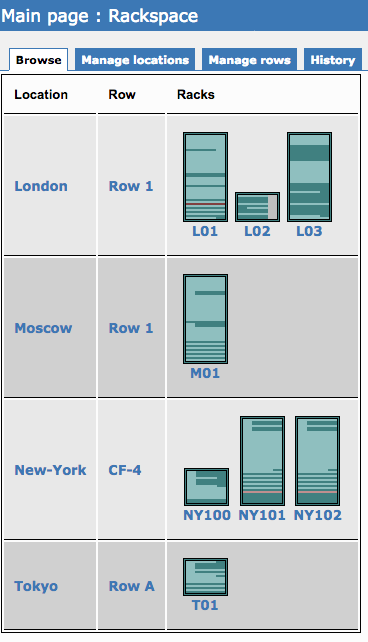

Racks screen from the demo page, showing multiple centers in London, Moscow, New York, and Tokyo.

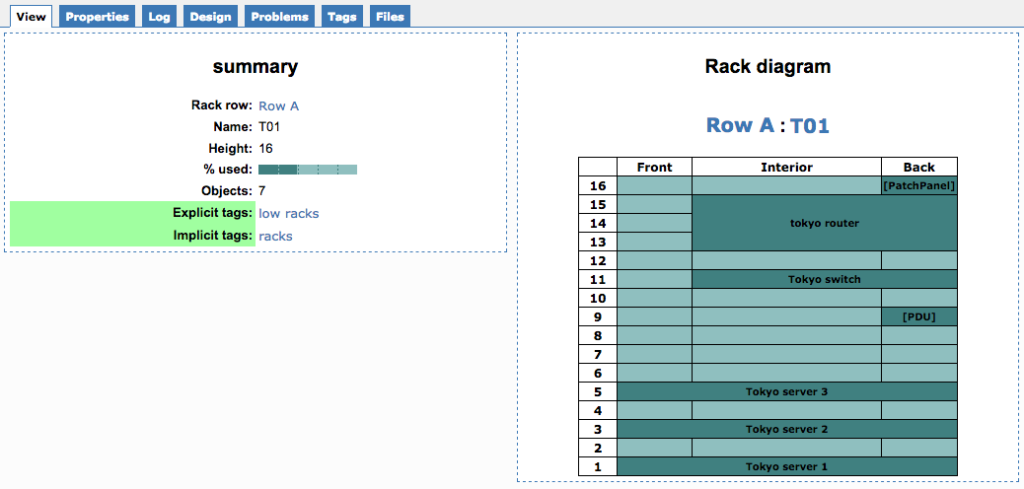

Digging into the view for a single data center – in this case one in Tokyo.

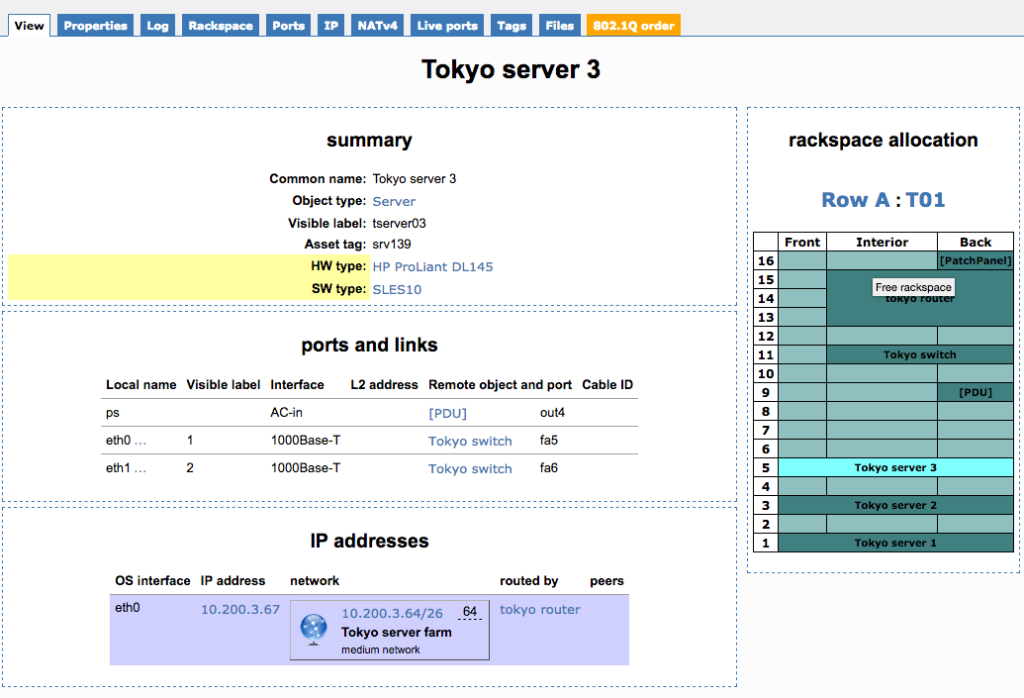

Single server in Tokyo, with notional ports, links, and IP addresses.

Global Infrastructure Simulator

A tool by an MIT Research Group, built to “evaluate the performance, availability and reliability of large-scale computer systems of global scope.” From the brochure: Today’s global information systems are highly customized and involve distributed servers and file stores and sophisticated indexing engines. “Predicting the performance of these systems is extremely difficult”, says the Director of MIT’s Geospatial Data Center. “To address these questions we have built a simulator so companies can test potential solutions before spending the money to purchase.” The software is proprietary and not downloadable; I believe it was released around 2010.

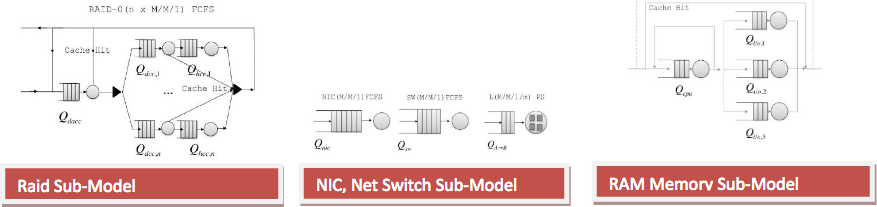

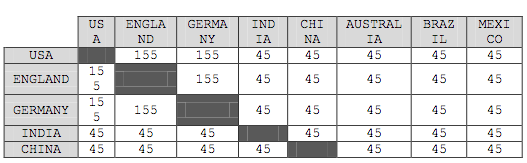

Based on research papers and screenshots, the software includes classes – coded models – of hardware. There is a RAM Memory Sub-model, a RAID (hard disk type) Sub-model, and so on (see above). This allows users to construct a simulated global network of data centers, each with their own configuration of hardware and software.

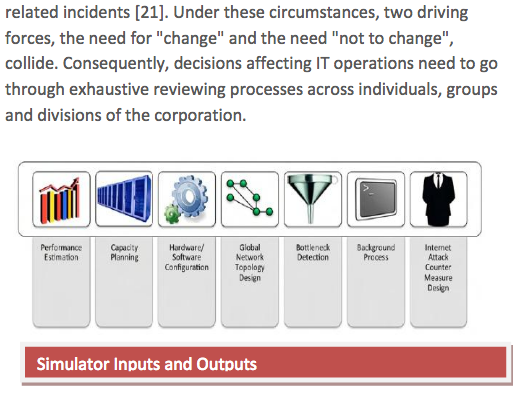

Based on these specifications, the simulator then estimates the response time of user requests, as well as aspects like the network usage and hardware usage for a data center. However, it’s worth noting that none of this is visual. Data centers are “defined” in code, and the tests that are run produce numbers as output. Visual results for academic publications are produced with graphing software, and the various features of the software are illustrated using clip-art.
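To give a feel for what “defining” a data center in code and getting numbers back might look like, here is a minimal Python sketch. It is not the MIT simulator’s API, which isn’t public; the class names, the hardware figures, the two example facilities, and the very simple additive response-time formula (no queueing or contention) are all my own assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class DiskModel:
    """Hypothetical storage sub-model: fixed seek time plus transfer time."""
    seek_ms: float
    throughput_mb_per_s: float

    def service_ms(self, request_mb: float) -> float:
        return self.seek_ms + 1000.0 * request_mb / self.throughput_mb_per_s


@dataclass
class CpuModel:
    """Hypothetical processor sub-model: time scales with work per request."""
    gigaops_per_s: float

    def service_ms(self, request_gigaops: float) -> float:
        return 1000.0 * request_gigaops / self.gigaops_per_s


@dataclass
class DataCenter:
    """A facility 'defined' purely in code from its sub-models."""
    name: str
    cpu: CpuModel
    disk: DiskModel
    network_latency_ms: float  # assumed one-way latency between user and facility

    def response_ms(self, request_gigaops: float, request_mb: float) -> float:
        """Round-trip network time plus CPU and disk service time (no queueing)."""
        return (2 * self.network_latency_ms
                + self.cpu.service_ms(request_gigaops)
                + self.disk.service_ms(request_mb))


# Two made-up facilities with different hardware and distance to the user.
tokyo = DataCenter("Tokyo", CpuModel(50.0), DiskModel(5.0, 200.0), 40.0)
boston = DataCenter("Boston", CpuModel(100.0), DiskModel(5.0, 400.0), 10.0)

for dc in (tokyo, boston):
    ms = dc.response_ms(request_gigaops=1.0, request_mb=2.0)
    print(f"{dc.name}: {ms:.1f} ms")
```

As in the real tool, the output is just numbers; any chart would have to be made afterwards in separate graphing software.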

One interesting observation in the GIS brochure is this tension between changing and not changing (see screenshot above). On the one hand, the data center needs to remain highly stable (“the need not to change”), keeping everything running 24 hours per day, 7 days per week, 365 days per year without disruptions. Indeed, as we know, high Tier Ratings are based on the facility’s ability to only have minutes of downtime per year. On the other hand, the data center must remain flexible and constantly adapt (“the need for change”). To be successful, a data center must upgrade its hardware, must implement new innovations in software, or at the very least, must stay atop maintenance by swapping out broken servers with replacements. A static data center is one in danger of becoming outdated – passed over in the dynamic marketplace – inefficient in terms of operations, or simply broken if repairs are not made.
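To make “minutes of downtime per year” concrete, the arithmetic is just the unavailable fraction multiplied by the minutes in a year. The sketch below assumes the availability percentages commonly cited for the four tiers; those figures are my assumption and do not come from the brochure.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Minutes per year a facility may be down at the given availability."""
    return (1.0 - availability_percent / 100.0) * MINUTES_PER_YEAR


# Commonly cited availability targets per tier (assumed, not from the brochure).
TIER_AVAILABILITY = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}

for tier, pct in TIER_AVAILABILITY.items():
    minutes = downtime_minutes_per_year(pct)
    print(f"{tier}: {pct}% availability allows about {minutes:.0f} minutes down per year")
```

Under these assumed figures the top tier works out to roughly 26 minutes per year, which shows how little room there is for “the need for change” to cause disruption.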

OpenDC

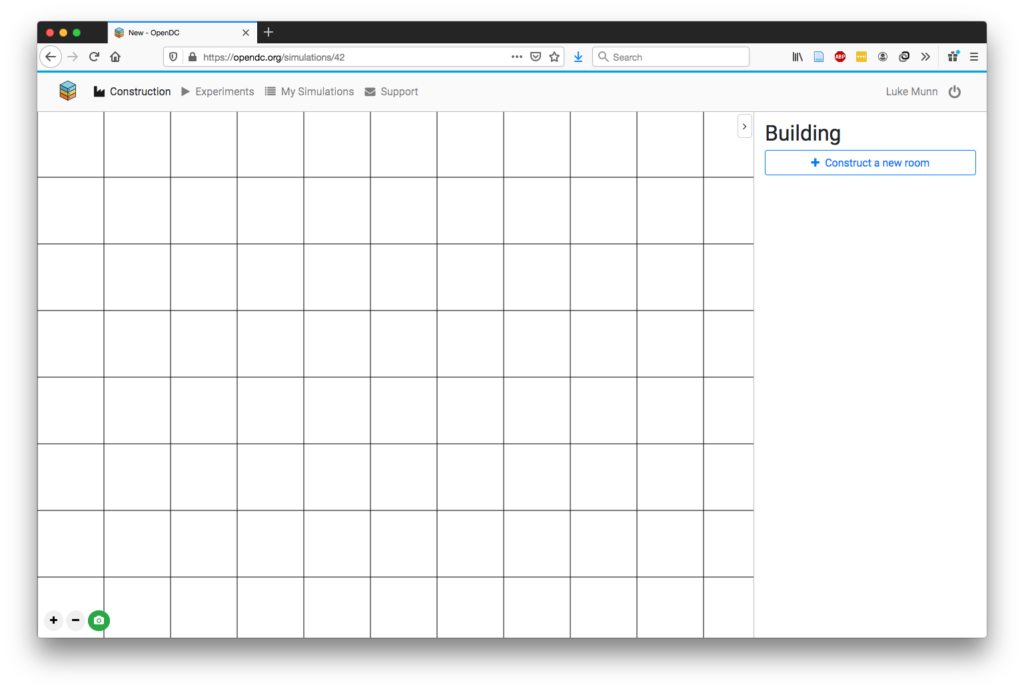

OpenDC bills itself as the “Collaborative Datacenter Simulation and Exploration for Everybody.” Their website opens by stressing the importance of the data center industry based upon its financial value, while recognizing the fact that it has many “hard to grasp concepts” and “needs to become accessible to many.” OpenDC is a project of the @Large Research Group and associated with the Delft University of Technology in the Netherlands.

OpenDC is one of the most visual simulators I found, and it is actively maintained, though by a very small team. The software is built under open-source principles, so it is free to access and download. Unfortunately it’s also very buggy. Projects sometimes disappear and then reappear, and the online server went down multiple times, so I had to submit issues on GitHub to get the developers to fix it.

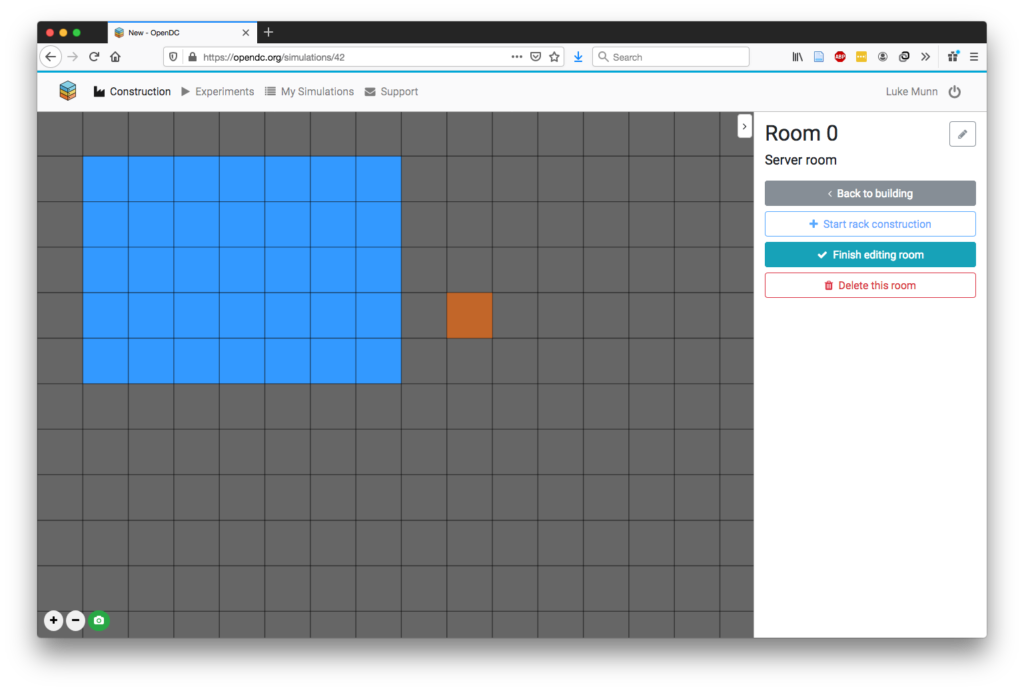

You start at the Build screen, where you can construct a new ‘room’ for your center.

You then construct a room of a certain dimension.

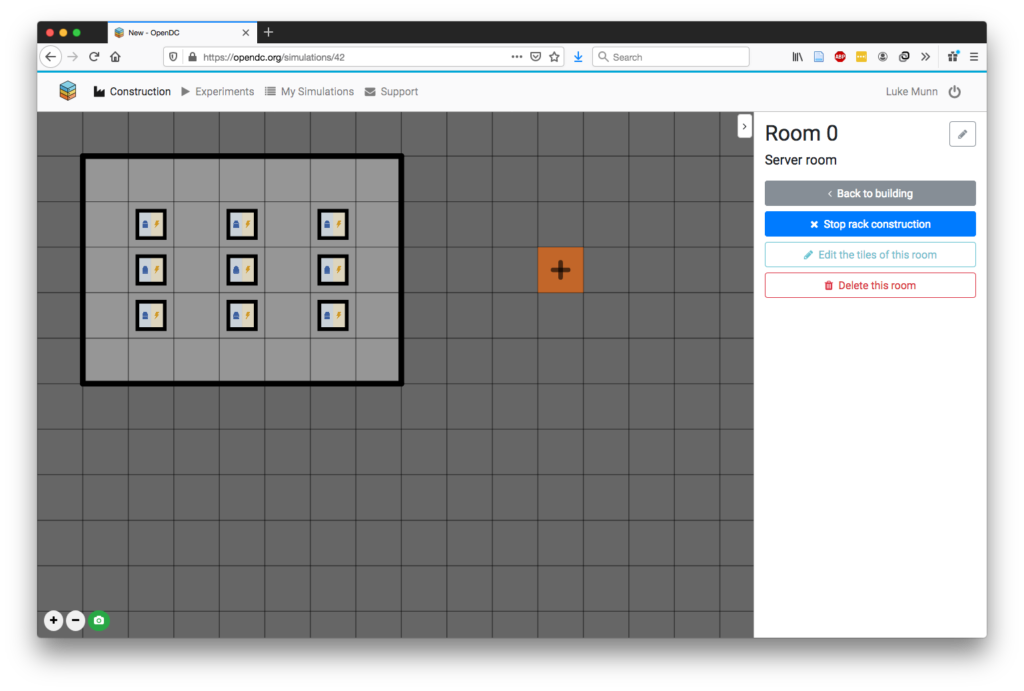

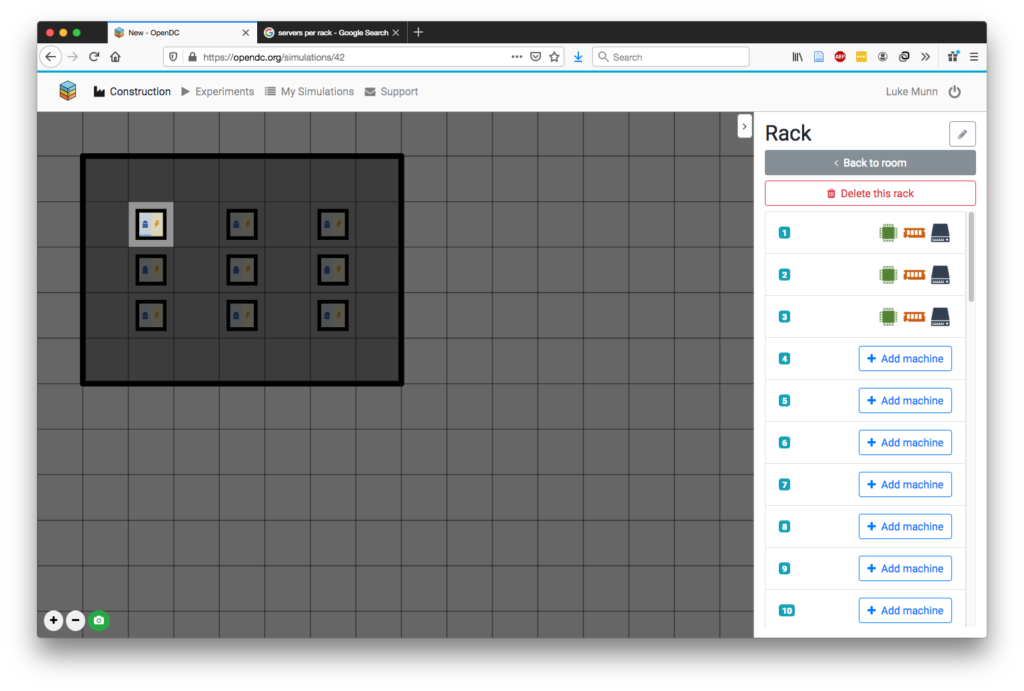

The room is populated with racks – here I’ve set up a 3×3 grid with aisles between. While these kinds of steps suggest a strong spatial awareness, in fact the room size and placement of racks seem not to be incorporated at all into the simulation in terms of temperature, performance, etc.

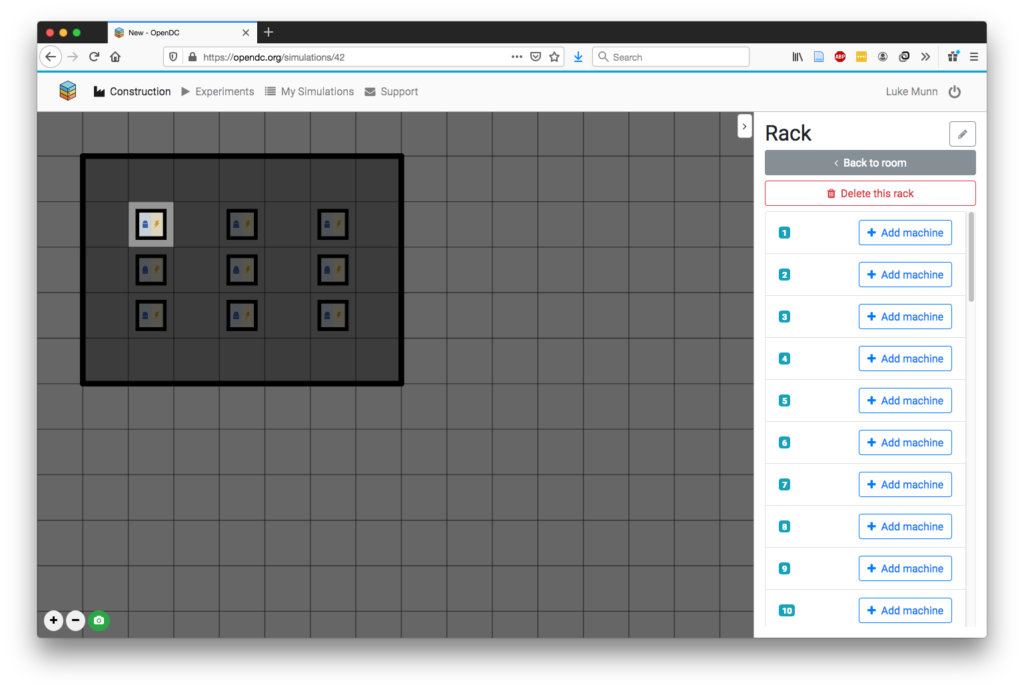

For each rack, you can add up to 32 machines, which AFAIK is the maximum unless you have custom racks. In the real world, many racks I’ve seen have empty spaces: they use 2U units instead of 1U, the client only needs a “half rack”, and so on. Indeed, I believe a rack completely filled with 1U units would be considered very dense in terms of power and temperature.
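A rough way to see why a completely filled rack is dense is to add up slots and power for a few layouts. This is a back-of-the-envelope Python sketch, not anything OpenDC does: the 32-slot rack matches the limit above, but the `Machine` class, the single-height and double-height units, and the wattage figures are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    height_units: int   # rack slots occupied: 1 for a slim server, 2 for a taller one
    power_watts: float  # hypothetical power draw under load


def rack_summary(machines: list[Machine], rack_slots: int = 32) -> dict:
    """Total the slots and power used by a list of machines in one rack."""
    used = sum(m.height_units for m in machines)
    if used > rack_slots:
        raise ValueError(f"{used} slots needed but the rack only has {rack_slots}")
    return {
        "machines": len(machines),
        "slots_used": used,
        "fill_ratio": used / rack_slots,
        "power_kw": sum(m.power_watts for m in machines) / 1000.0,
    }


# Three layouts: fully packed with slim units, fully packed with taller units,
# and a "half rack" that leaves half the slots empty.
layouts = {
    "full, single-height": [Machine(1, 350.0) for _ in range(32)],
    "full, double-height": [Machine(2, 500.0) for _ in range(16)],
    "half rack": [Machine(1, 350.0) for _ in range(16)],
}

for label, machines in layouts.items():
    print(label, rack_summary(machines))
```

With these made-up wattages, the rack packed with slim units draws the most power in the same footprint, which is the density concern described above.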

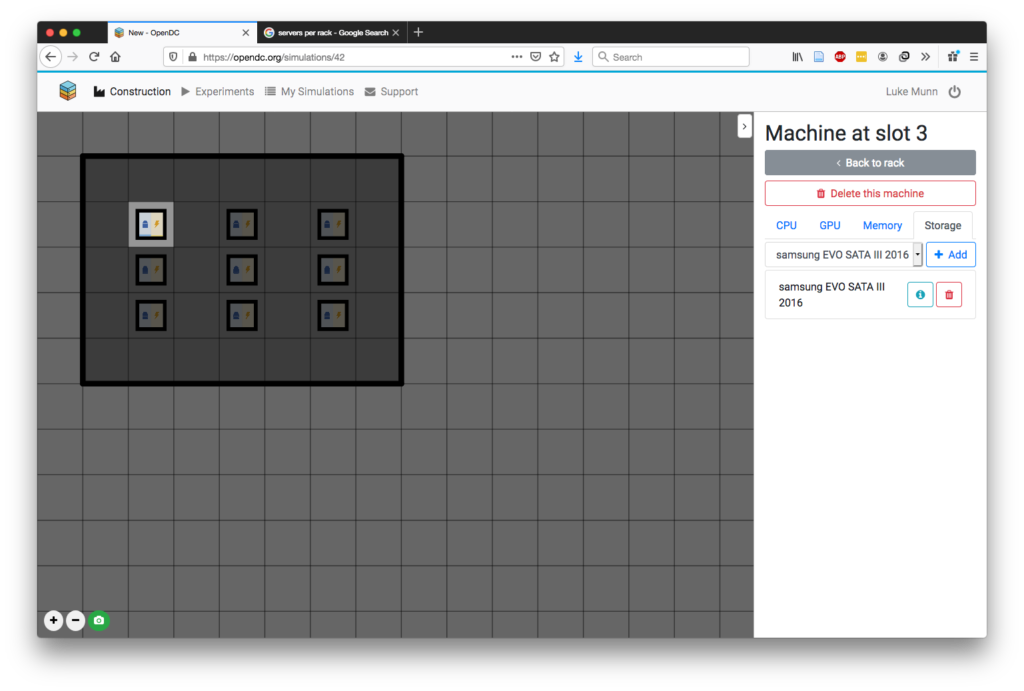

Here I’ve added a hard-drive to a machine on the rack. The specificity of make and model here seems impressive, but in fact, OpenDC only has one hard-drive to choose from.

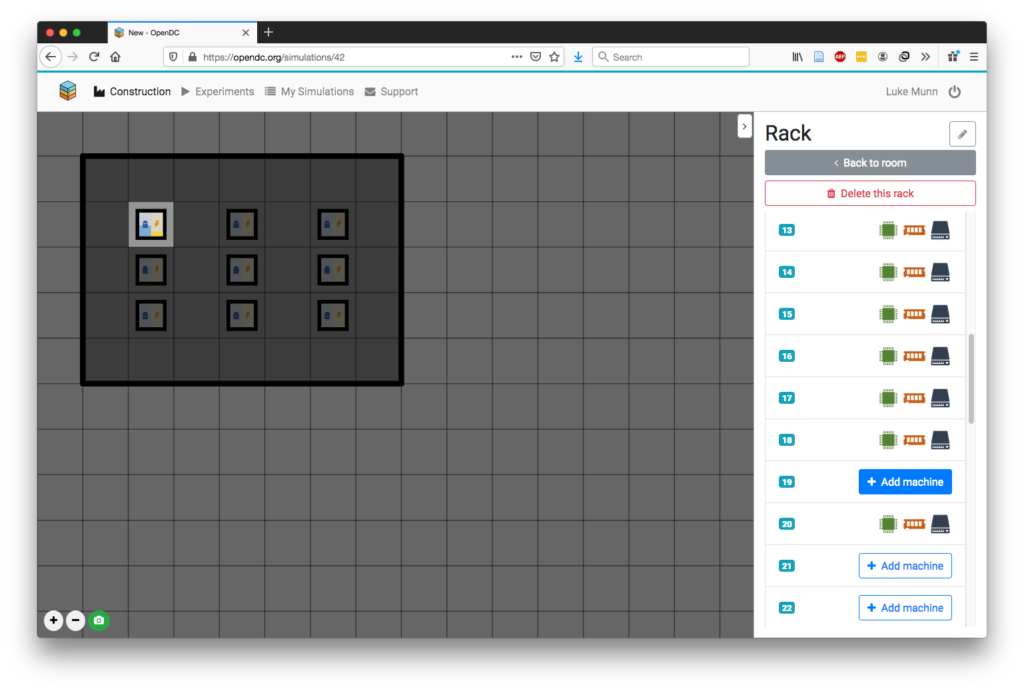

I’ve now added 3 machines to this rack, each with a processor (green icon), memory (orange), and hard drive (grey icon). These do not have GPUs, which can also be added.

I added 20 machines to this rack, all with the same specs.

From previous experiments with this, you now go to the “Experiments” tab, choose this Room, and then essentially run a stress test. You can choose between 4 different benchmarking stress tests. Getting these to actually work is highly challenging; most of the time they don’t. The stress test is then run on the rack, showing a line graph of the rise in CPU processing and heat over 100 seconds (see below for indicative test).

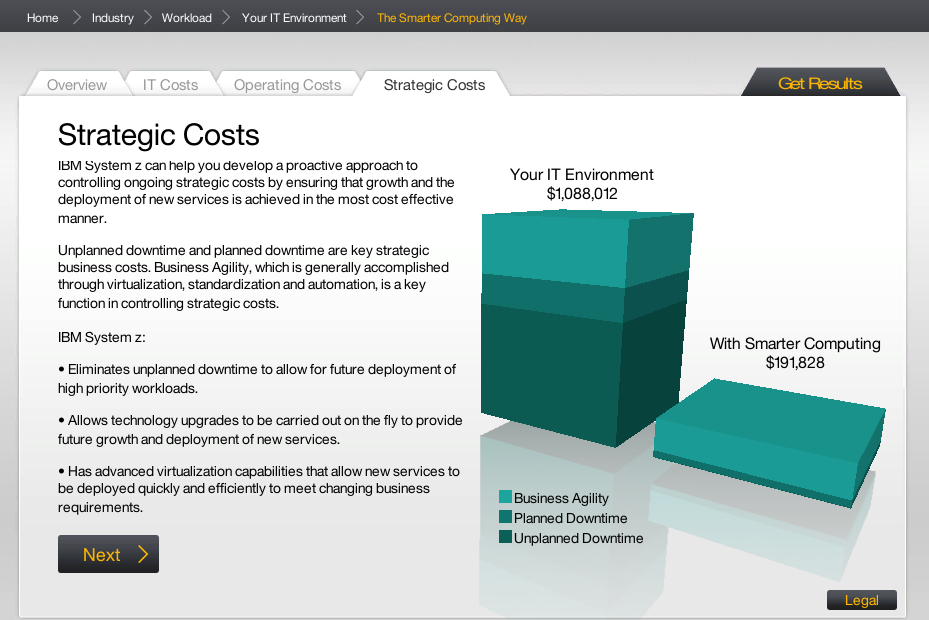

IBM SmarterComputing

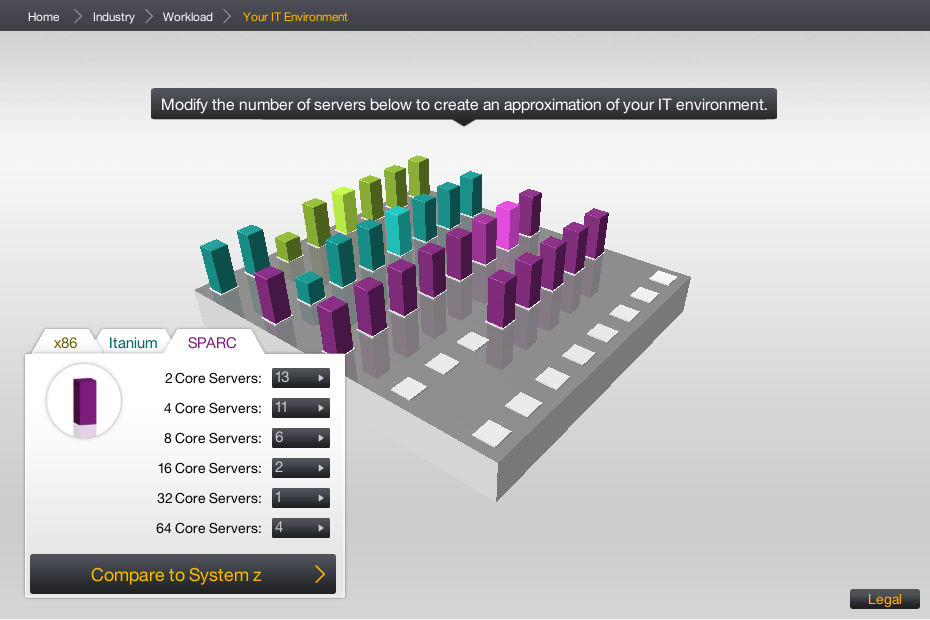

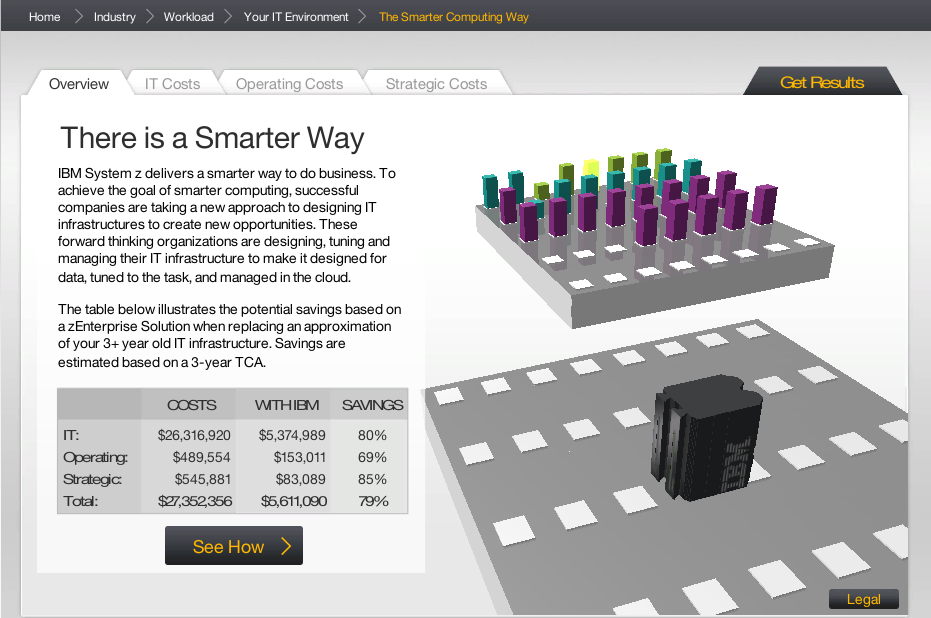

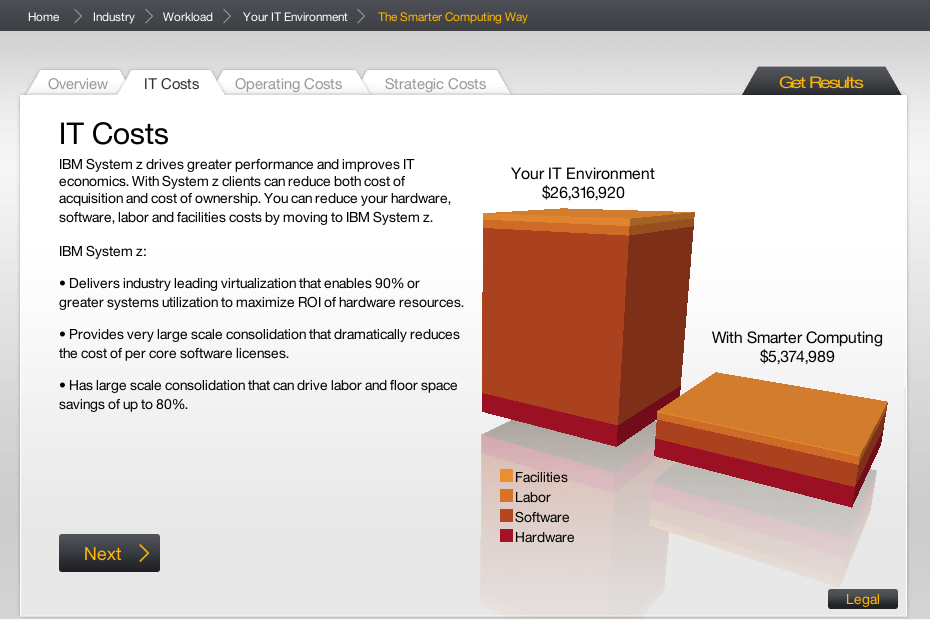

This is basically a light simulator done as a marketing campaign for IBM’s Smarter Computing offering a few years ago. You enter your industry and number of computers; the interactive then computes a cost and shows you how much cheaper it would be with IBM.

As far as I can tell, the workload and industry don’t seem to change the simulation (at least this isn’t obvious or signaled in any way). Perhaps this is just a way of seeming more attuned to specific sectors and their needs.

FireSim

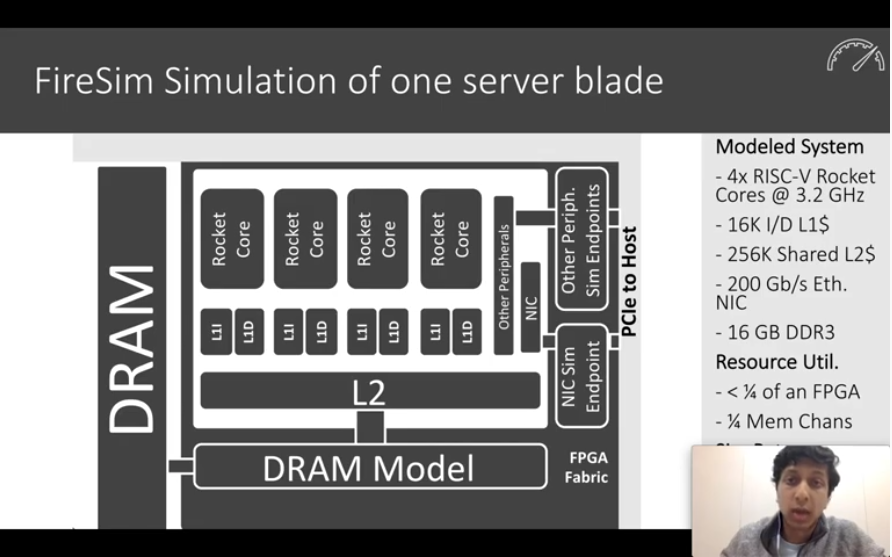

From its page, “FireSim can simulate arbitrary hardware designs written in Chisel… With FireSim, you can write your own RTL (processors, accelerators, etc.) and run it at near-FPGA-prototype speeds on cloud FPGAs, while obtaining cycle-accurate performance results (i.e. matching what you would find if you taped-out a chip).” In essence, the idea is that you take a custom hardware design and simulate its performance in the cloud on FPGAs (field-programmable gate arrays, integrated circuits that can be configured by customers). From prior research, it seems that this kind of custom hardware, while still niche, is growing, with a lot of customers wanting bespoke solutions for their apps at the ‘bare metal’ level. Unfortunately, FireSim can’t be used or downloaded, but there were a couple of interesting points here.

One brief note here is that the simulation becomes very low level. This is still about cloud computing, but it starts with a single server blade and builds from that. See the next few screenshots…

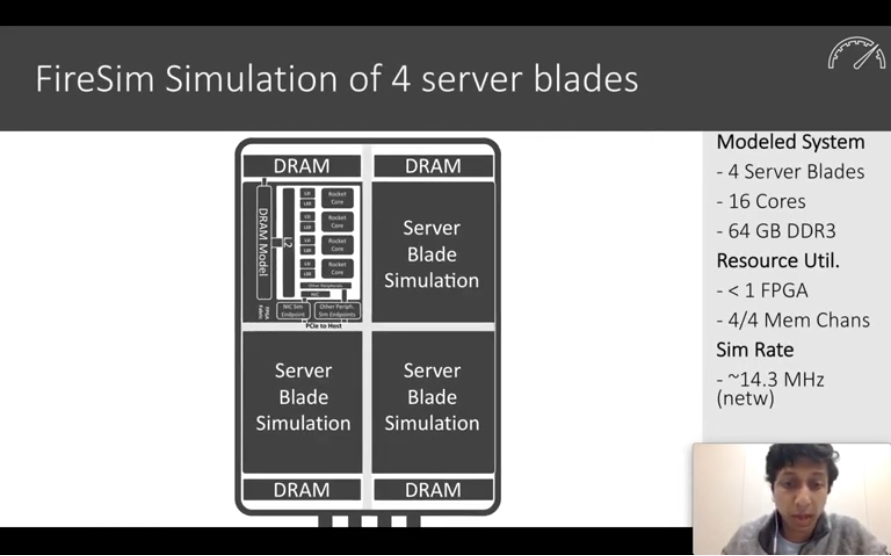

…then put together these components 4 times to form 4 server blades…

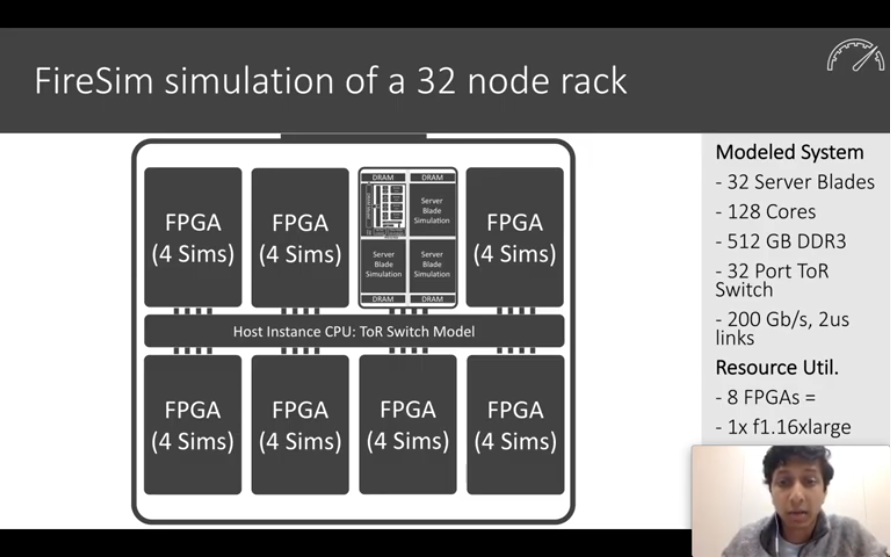

…then a whole 32-node rack…

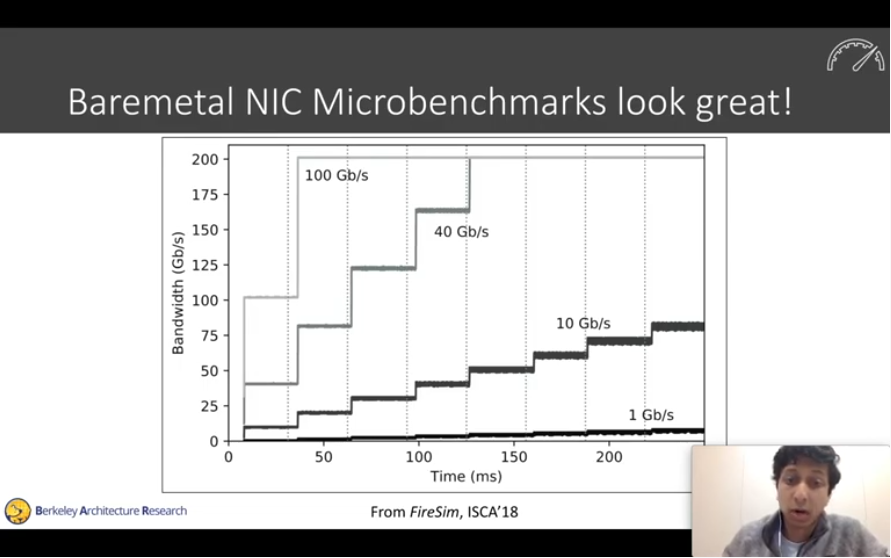
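The bottom-up composition in these screenshots (one blade, then four blades together, then a 32-node rack) can be sketched as nested data structures. This is only an illustration in Python, not FireSim’s actual configuration format; the class names are hypothetical, and the figure of eight groups per rack is simply 32 divided by 4.

```python
from dataclasses import dataclass


@dataclass
class Blade:
    """One simulated server blade (a single node)."""
    node_id: int


@dataclass
class BladeGroup:
    """Several blades put together, four at a time in the walkthrough."""
    blades: list[Blade]


@dataclass
class Rack:
    """A rack assembled from blade groups."""
    groups: list[BladeGroup]

    @property
    def node_count(self) -> int:
        return sum(len(group.blades) for group in self.groups)


def build_rack(total_nodes: int = 32, blades_per_group: int = 4) -> Rack:
    """Build a rack by repeating the same blade group until all nodes are placed."""
    if total_nodes % blades_per_group != 0:
        raise ValueError("total_nodes must be a multiple of blades_per_group")
    groups = [
        BladeGroup([Blade(start + i) for i in range(blades_per_group)])
        for start in range(0, total_nodes, blades_per_group)
    ]
    return Rack(groups)


rack = build_rack()
print(len(rack.groups), "groups,", rack.node_count, "nodes")
```

The point of the structure is that everything at the rack level is derived from the single-blade definition, so a change to one blade design propagates to all 32 nodes.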

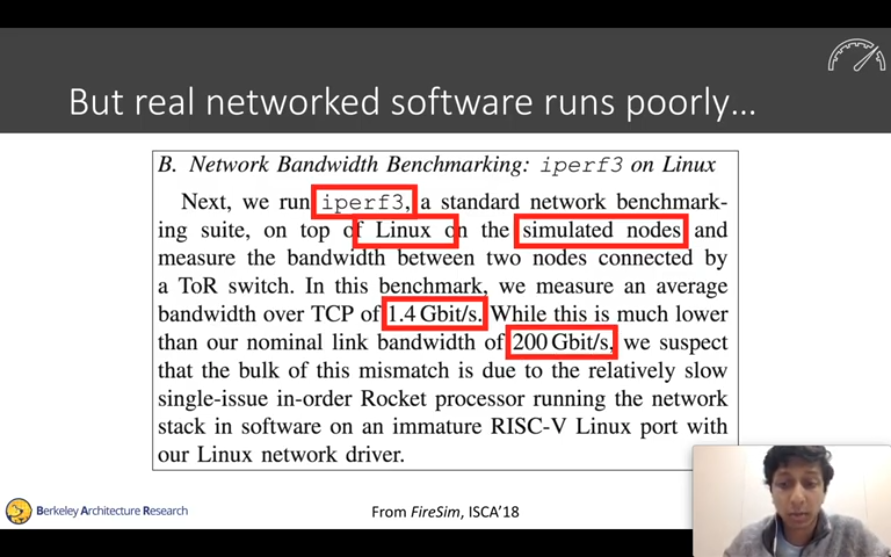

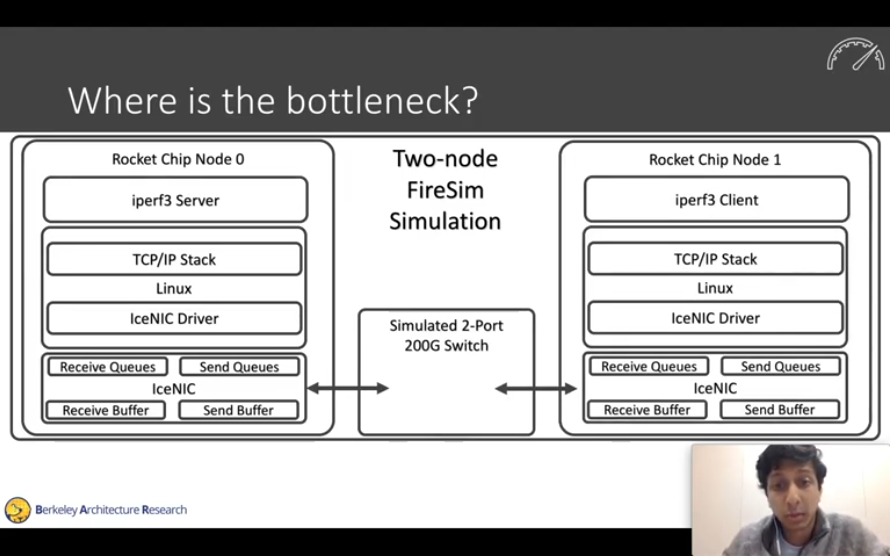

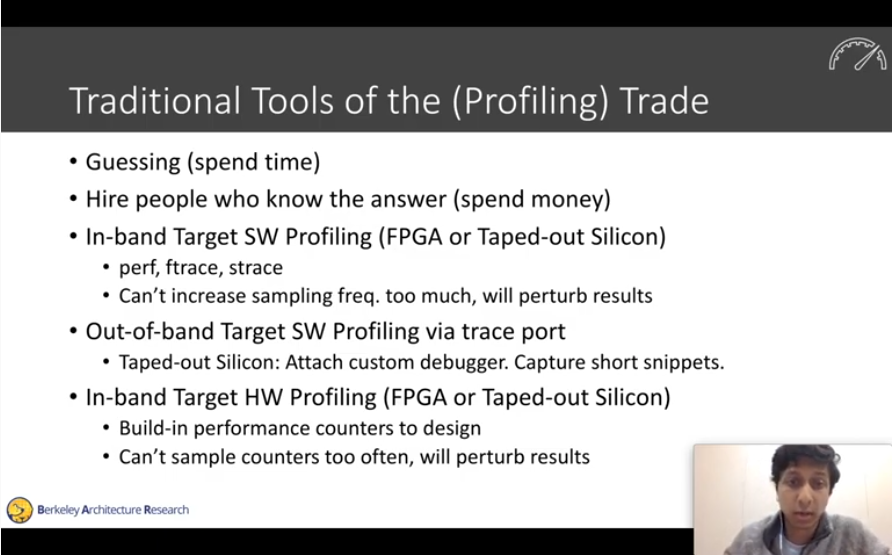

One of the main points that Sagar Karandikar makes in this presentation is the difference between tech specs and real-world performance.

The vision, as with any simulation, is to help you identify problems before committing. Without simulating, you’d have to actually build the hardware and deploy it (‘taped out silicon’), or hire experts to extensively test prototypes and then report back. FireSim provides a way of experimenting with custom hardware while only paying general cloud computing rates.